DIVENET: Dive Action Localization and Physical Parameter Extraction for High Performance Training

Abstract

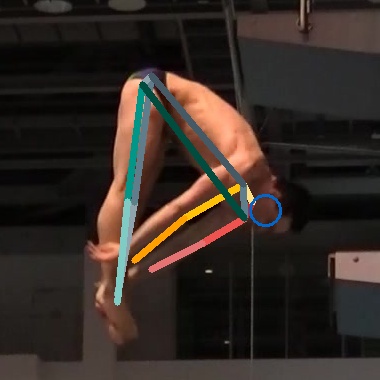

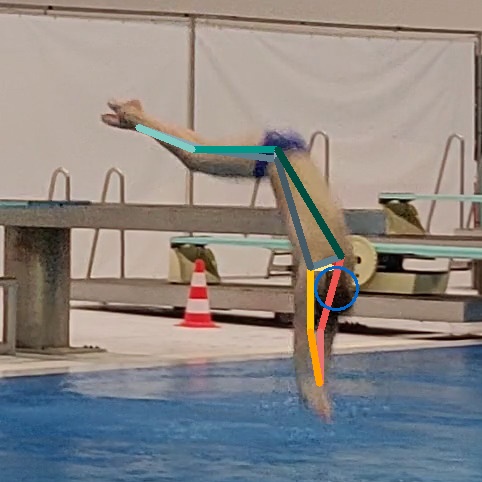

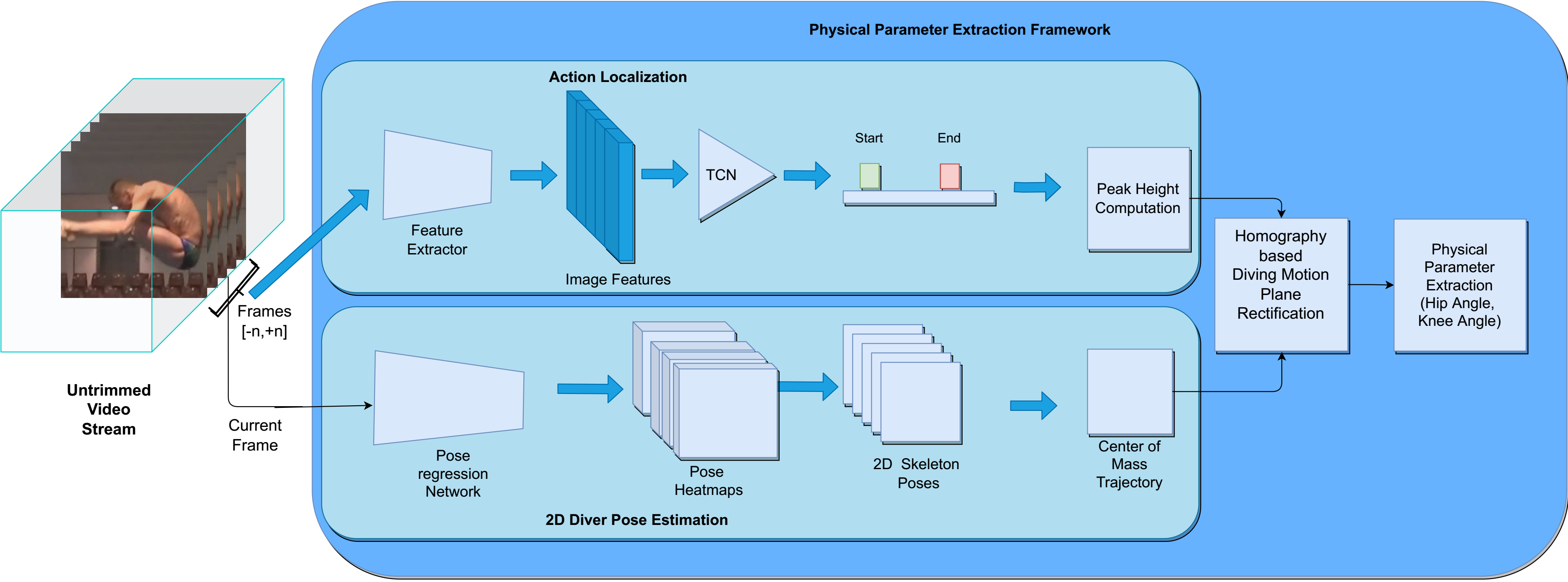

There have been rapid advances in human activity classification using deep convolutional neural networks, which have also shown promising results in classifying various sports activities. However, accurately localizing a particular sports event or activity in a continuous video stream remains a challenging problem. Accurate detection of sports actions also enables objective comparison of different performances. In this work, we focus on detecting springboard diving in an unconstrained environment. We present a learned Temporal Convolution Network (TCN) over a backbone feature extractor to accurately localize diving actions in videos. We measure localization accuracy by detecting the action within a single-frame latency (< 25 ms) in a 60 Hz video. We recorded a monocular diving video dataset consisting of 450 video clips of different athletes diving from varying springboard heights [3 m, 5 m, 7.5 m, 10 m]. Using the temporal demarcation provided by the action localization step, we estimate the peak dive height of the body center of mass (COM) via the oblique throw formula. In addition, we train a pose regression network to track and estimate the diver's 2D body poses. Further, we propose a method to compute a homography between the diving motion plane and the image view for each dive in order to accurately compute physical parameters such as COM position and hip and knee joint angles. By taking 4 points from image space and aligning them with the real parabolic trajectory, we rectify the image view to a fronto-parallel orthographic projection view for accurate physical parameter extraction. Finally, we reconstruct the complete real trajectory of the diving motion using our low-latency, accurate localization and pose estimation framework. We evaluate the trained model and achieve an accuracy of 95% in localizing diving actions over untrimmed test video sequences. The pose regression model shows competitive results on publicly available 2D pose in-the-wild datasets and on the Diving dataset. We present ablation studies on the impact of backbone feature extractors, the optimal temporal context, and the superiority of TCN over GRU networks.
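The peak-height step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an ideal oblique throw without air drag, that the COM starts at the board height at the take-off frame, and that it reaches the water surface (height 0) at the entry frame. The function name `peak_com_height` and its parameters are hypothetical, chosen only for illustration.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

def peak_com_height(board_height_m, takeoff_frame, entry_frame, fps=60.0):
    """Estimate the peak COM height above the water from the flight time.

    Assumptions (not taken from the paper): no air drag, COM at board
    height at take-off, COM at water level (0 m) at entry.
    """
    flight_time = (entry_frame - takeoff_frame) / fps
    if flight_time <= 0:
        raise ValueError("entry_frame must come after takeoff_frame")
    # Vertical motion of an oblique throw: y(t) = h0 + v0*t - 0.5*g*t^2.
    # Setting y(T) = 0 at water entry gives the vertical take-off velocity.
    v0 = (0.5 * G * flight_time ** 2 - board_height_m) / flight_time
    if v0 <= 0:
        # The diver never rises above the board under these assumptions.
        return board_height_m
    # The peak is reached when the vertical velocity is zero.
    return board_height_m + v0 ** 2 / (2.0 * G)
```

For example, `peak_com_height(3.0, takeoff_frame=120, entry_frame=197)` would estimate the peak for a 3 m dive whose localized flight phase spans 77 frames at 60 Hz. Only the vertical component matters for the peak, which is why the temporal boundaries from the localization step are sufficient under these assumptions.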

DEMO VIDEO (coming soon)

Citation

@inproceedings{murthydivenet2023,
  title = {DIVENET: Dive Action Localization and Physical Parameter Extraction for High Performance Training},
  author = {Murthy, Pramod and Taetz, Bertram and Lekhra, Arpit and Stricker, Didier},
  booktitle = {IEEE Access},
  year = {2023},
  url = {http://divenet.dfki.de},
  doi = {10.1109/ACCESS.2023.3265595}
}

Acknowledgments

We would like to thank our colleagues at DSV and IAT for their support in data gathering. We are extremely grateful to Dr. Thomas Köthe for sharing his expertise, insights and comments throughout our work. This work was supported by the Federal Ministry of Education and Research (BMBF), Germany, under the project DECODE with grant number 01IW21001, and partly funded by the German Swimming Federation (DSV).